Apple Card’s algorithm is sexist and confusing

Tribune News Service

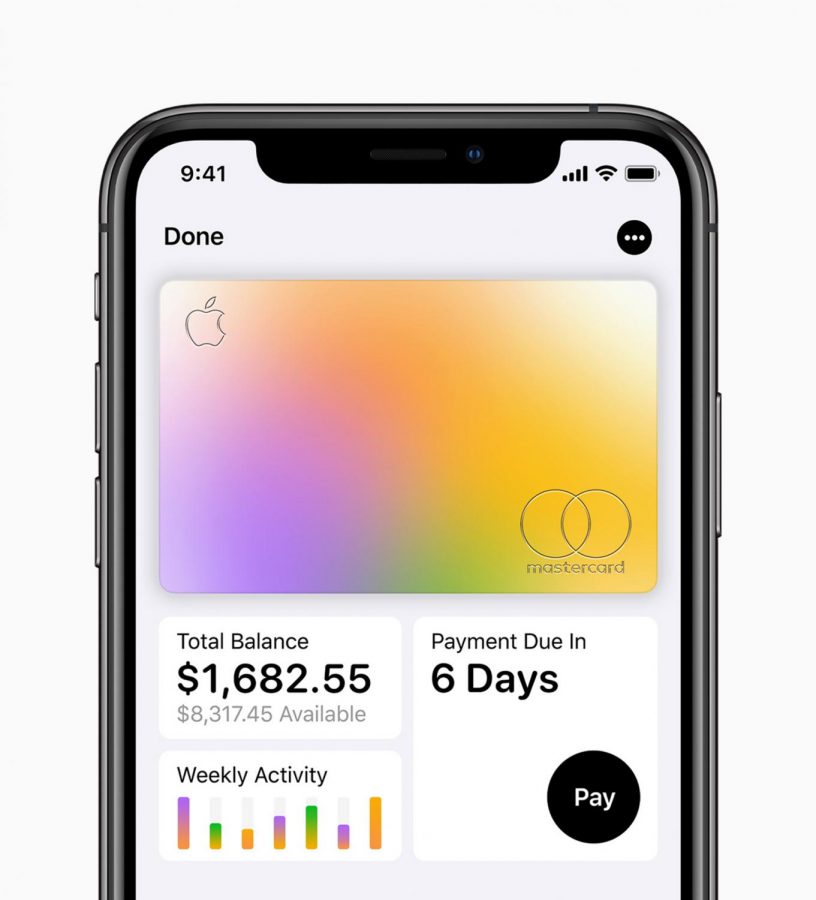

The Apple Card will act much the same as the Apple Wallet, but it’s also a physical card you can carry in your wallet. (Apple/TNS)

It was only a matter of time until Apple—our technology overlords—launched their own credit card. It is another pretty and shiny piece of metal, but probably the most colorful. I will admit I was intrigued by it, and part of me really wanted one. Thankfully my 21-year-old conscience came to the forefront and reminded me I am still in college and do not really need a credit card.

Even if I had gotten it, I probably would not have received as high a credit limit as, say, my boyfriend, who did end up applying for and getting an Apple Card.

The algorithm associated with the Apple Card is being called sexist.

Algorithms are supposed to make our lives easier and bypass the inherent racial and sexual biases of humans, yet technology has failed us.

David Heinemeier Hansson, a web developer, took to Twitter recently to voice his complaints about the sexist nature of the Apple Card.

“The @AppleCard is such a fucking sexist program. My wife and I filed joint tax returns, live in a community-property state, and have been married for a long time. Yet Apple’s black box algorithm thinks I deserve 20x the credit limit she does. No appeals work,” Hansson posted.

According to Hansson’s later tweets, his wife also has better credit than he does. Despite that, he received the higher credit limit.

Hansson is not the only one alleging that the Apple Card is sexist. Even Steve Wozniak, an Apple co-founder, has complained about this problem.

Here is where the problem gets even juicier: Apple does not even run the card, despite boasting on its website that Apple Card is “a new kind of credit card. Created by Apple, not a bank.” When you go to apply for the card, you find out that the bank Goldman Sachs is in charge and issues the cards.

However, neither Apple nor Goldman Sachs has commented on the possibility of an appeal, according to the Los Angeles Times.

So, how many people need to complain until they do something?

As of Nov. 9, 2019, the New York Department of Financial Services (NYDFS) is looking into these allegations.

Linda Lacewell, NYDFS’s superintendent, took to Twitter to announce this.

“Any algorithm that intentionally or not results in discriminatory treatment of women or any other protected class violates New York law,” a DFS spokesperson said in a statement. “DFS is troubled to learn of potential discriminatory treatment in regards to credit limit decisions reportedly made by an algorithm of Apple Card, issued by Goldman Sachs.”

Part of the problem lies in the lack of transparency in the algorithm’s decision-making process.

Most of us, myself included, feel that understanding how algorithms work is far beyond our reach. The lack of transparency does not make them any easier to understand.

Algorithms are supposed to reduce or eliminate bias, whether racial, sexual or of any other kind, but the way they function needs to improve.

The Apple Card is not the only case where algorithms have failed us. Algorithms used in the health care system have also had problems with racial bias, despite being put in place to get rid of it.